NightCafe is a web site that provides AI image generation tools, and is primarily focused on the hobbyist market.

I’ve used it since December 2021, and know a handful of others who used it some months before that.

At that time, NightCafe offered two options: Style Transfer and ‘Artistic’, which is a form of VQGAN+CLIP.

Style Transfer takes an existing image or photo, and applies an existing art style to it from a number of preset options, though you can also upload your own. The options they provide on the site are classic works of art that are presently believed in good faith to be in the public domain.

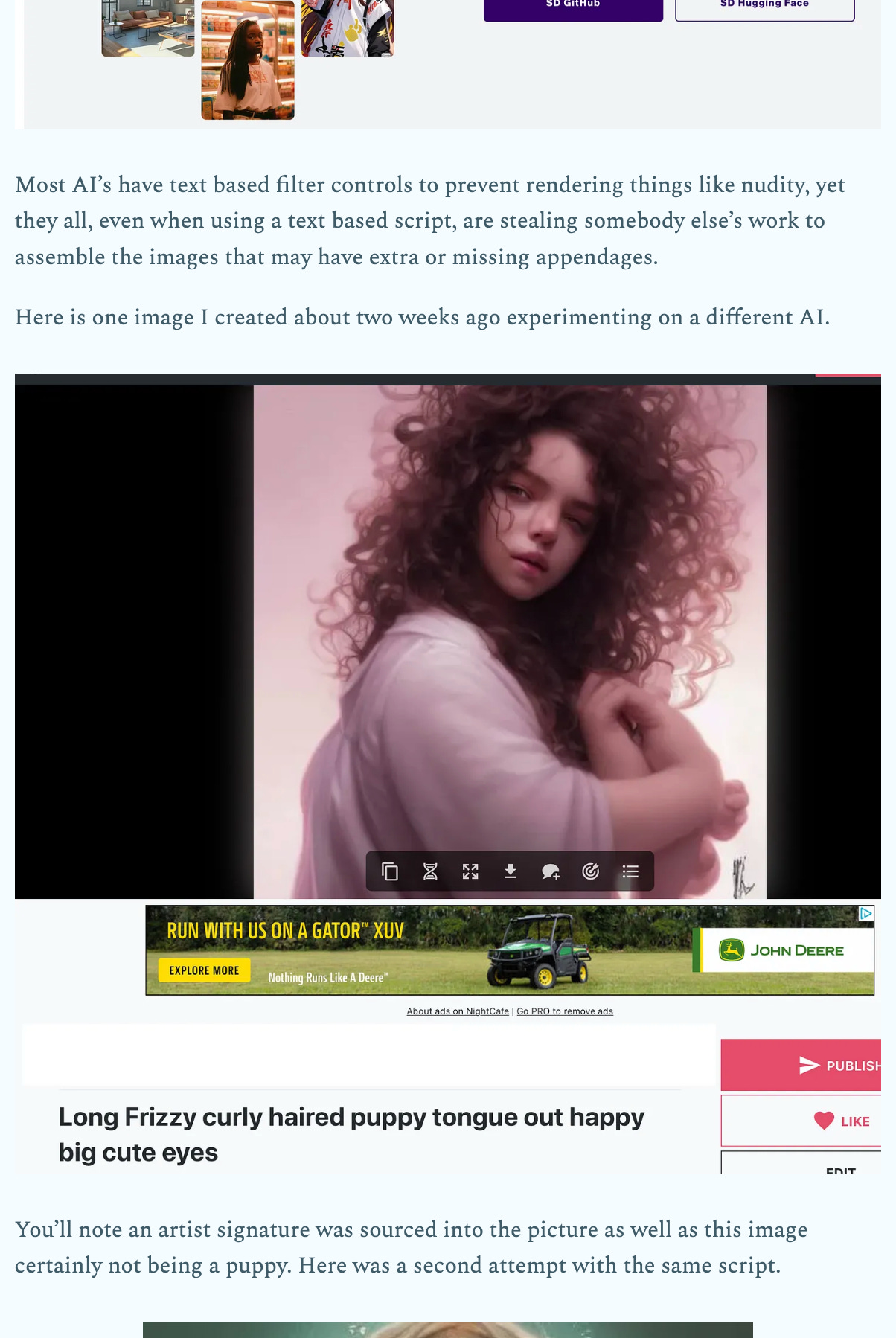

Here’s the final image:

It’s made by applying the artistic style of Vincent Van Gogh’s ‘Starry Night’ to an image I made in Midjourney of a sleeping cat. Here is the style image provided by NightCafe, and the source/start/init image I uploaded myself.

You can check out the link to the page on NightCafe here.

Here’s an example of ‘Artistic’ (VQGAN+CLIP).

You can find its page right here.

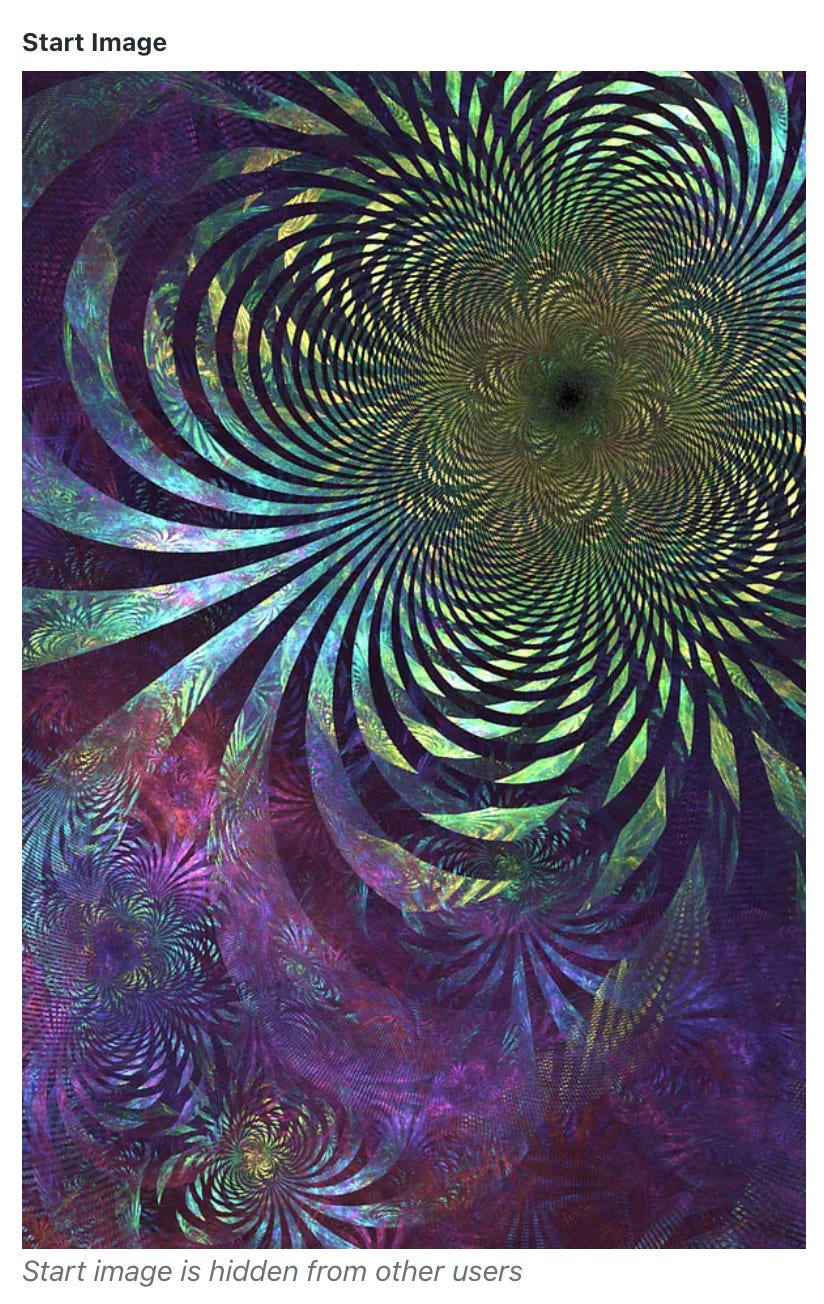

Now, I want you to scroll down the page and take a peek: it’ll tell you that the prompt and source image are hidden. (A source image was used for this piece, though one isn’t required to use the Artistic model.)

It has been suggested that this indicates some kind of impropriety or something to hide, which, frankly, is nonsense.

From the time I started out, a number of people used their own sketches or artwork in combination with the Artistic model, and they wanted to protect those works from being cloned by other users without their permission.

This isn’t just a matter of someone snagging the image off the site and re-uploading it: the way NightCafe works is collaborative by design for those who wish to participate. The site allows one user to duplicate the work of another, provided the original creator has enabled this function, which they refer to as ‘evolve’.

If this feature is enabled, and the original work uses a source image, it allows the user legitimately using the evolve function to have access to the original creator’s source image as well.

Here’s my so very suspicious source/start/init image for the piece above:

…but, wait! That’s not shady or suspicious at all! What gives?

What gives is that it’s my original fractal art, and it’s for me to use, not everyone on NightCafe.

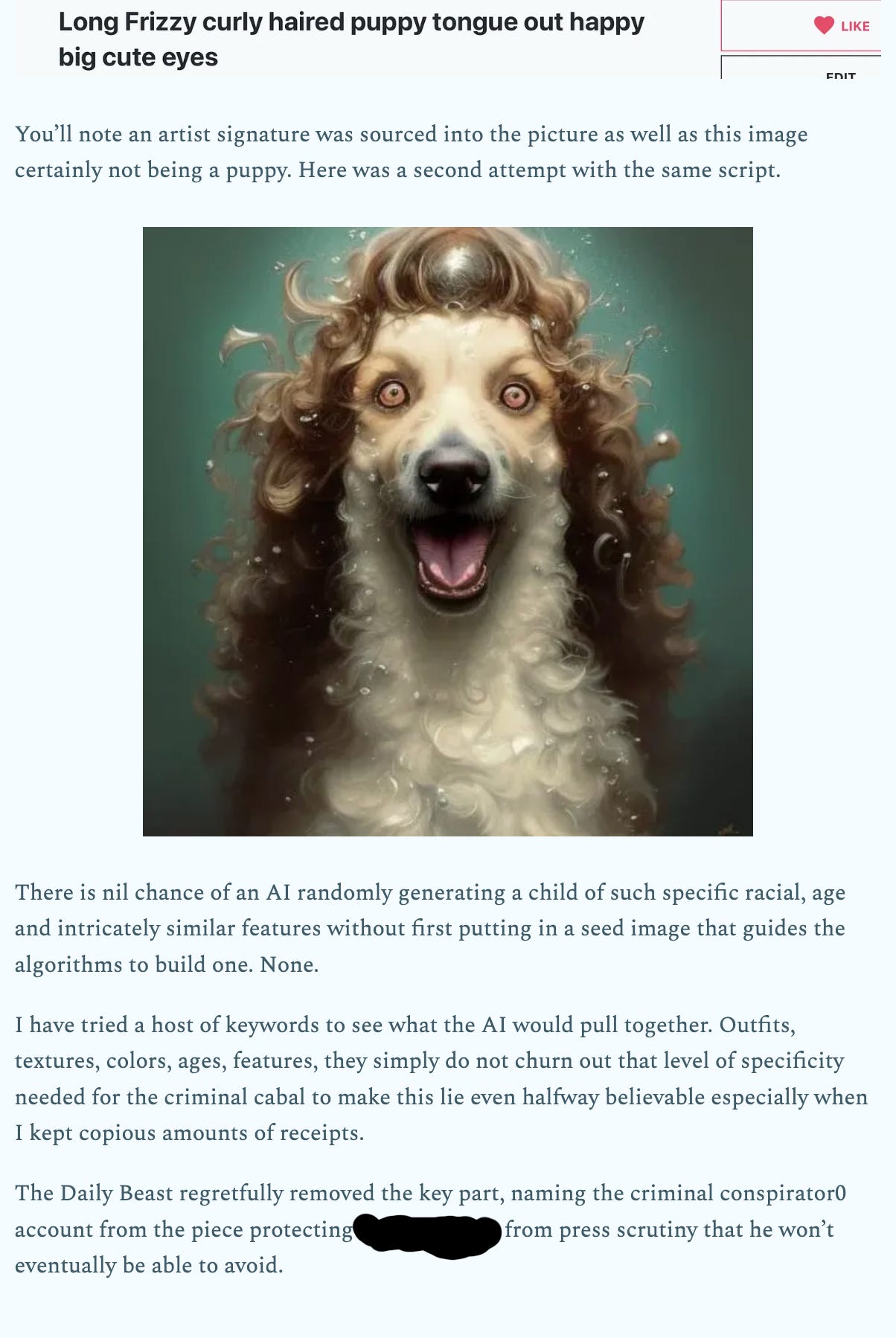

As you can clearly see, these models do not produce anything like the GAN image at issue in Steven Jarvis’ lies, despite the fact that he directs users to the NightCafe site to ‘prove’ the means and methods in use there are how it was made.

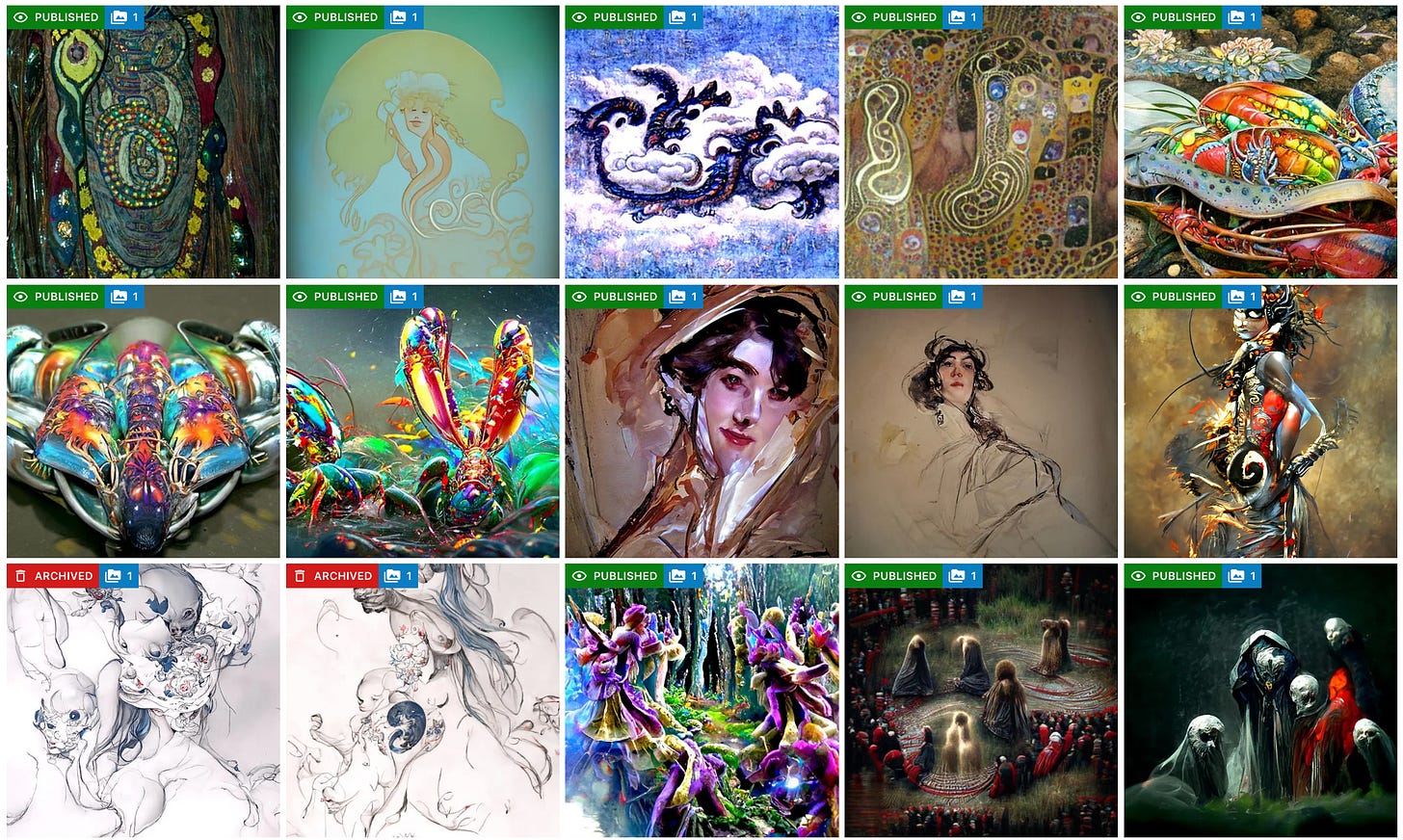

The next addition to the AI model lineup at NightCafe was their Coherent model, which was much more realistic and able to produce vaguely recognizable subjects for the most part, though these images were anything but photorealistic.

I wasn’t really a fan of Coherent, so I barely used it. It also allowed source/start/init images, but that didn’t really help much in my case. Needless to say, this screenshot of my pile of Coherent images should amply demonstrate that this model was also not at all up to creating the kind of image at issue.

(Read: they’re still not terribly coherent at all.)

It should be noted that these were the only models available on NightCafe in July 2022, when Steven Jarvis claims the image was created.

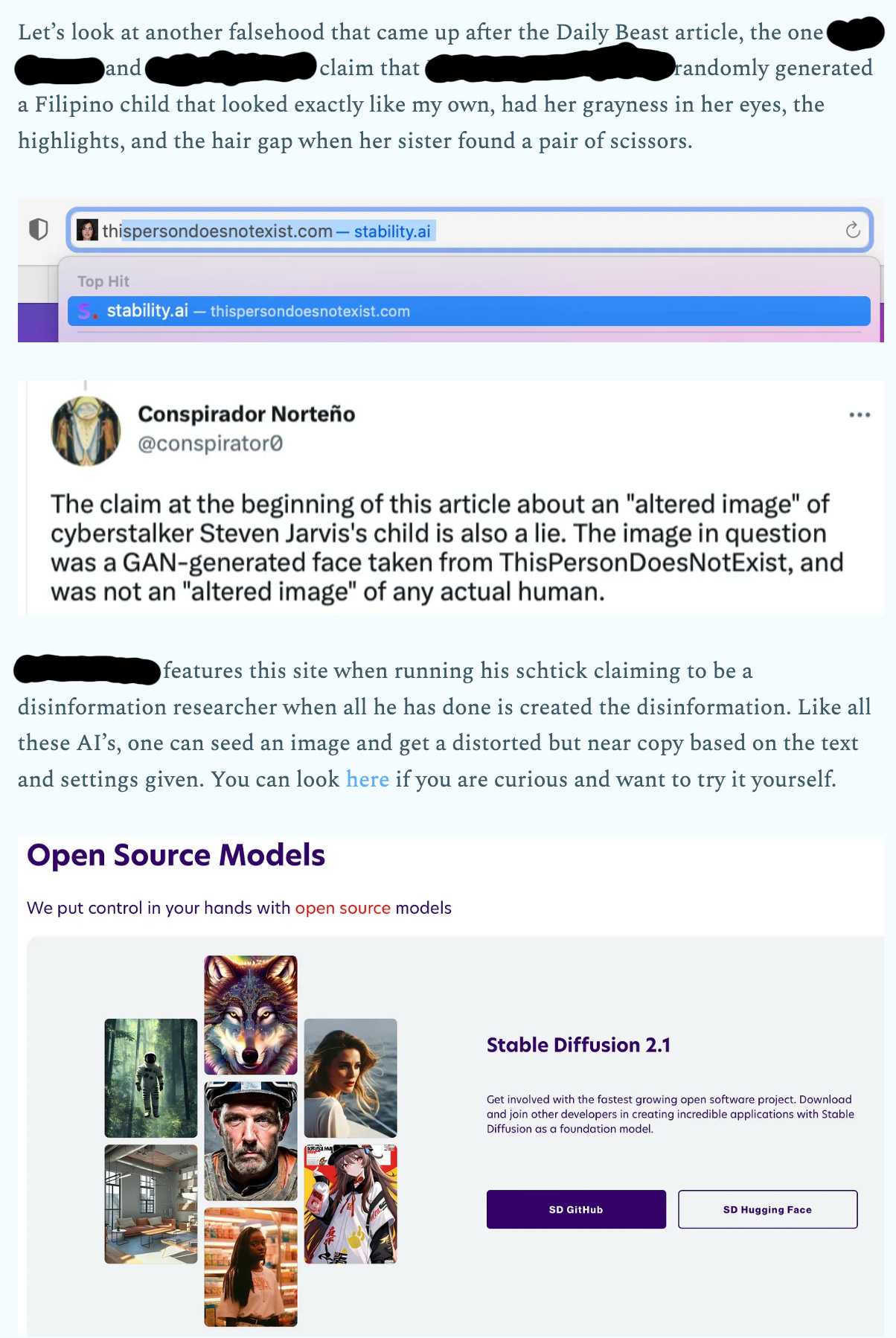

I would like to direct your attention to the claims printed in The Daily Beast sourced from Steven Jarvis:

The model he directly instructs his users to try on the NightCafe site in order to recreate the image is Stable Diffusion.

I could be nit-picky and say the model he’s listing — Stable Diffusion 2.1 — didn’t exist at the time, but that’s not the best part:

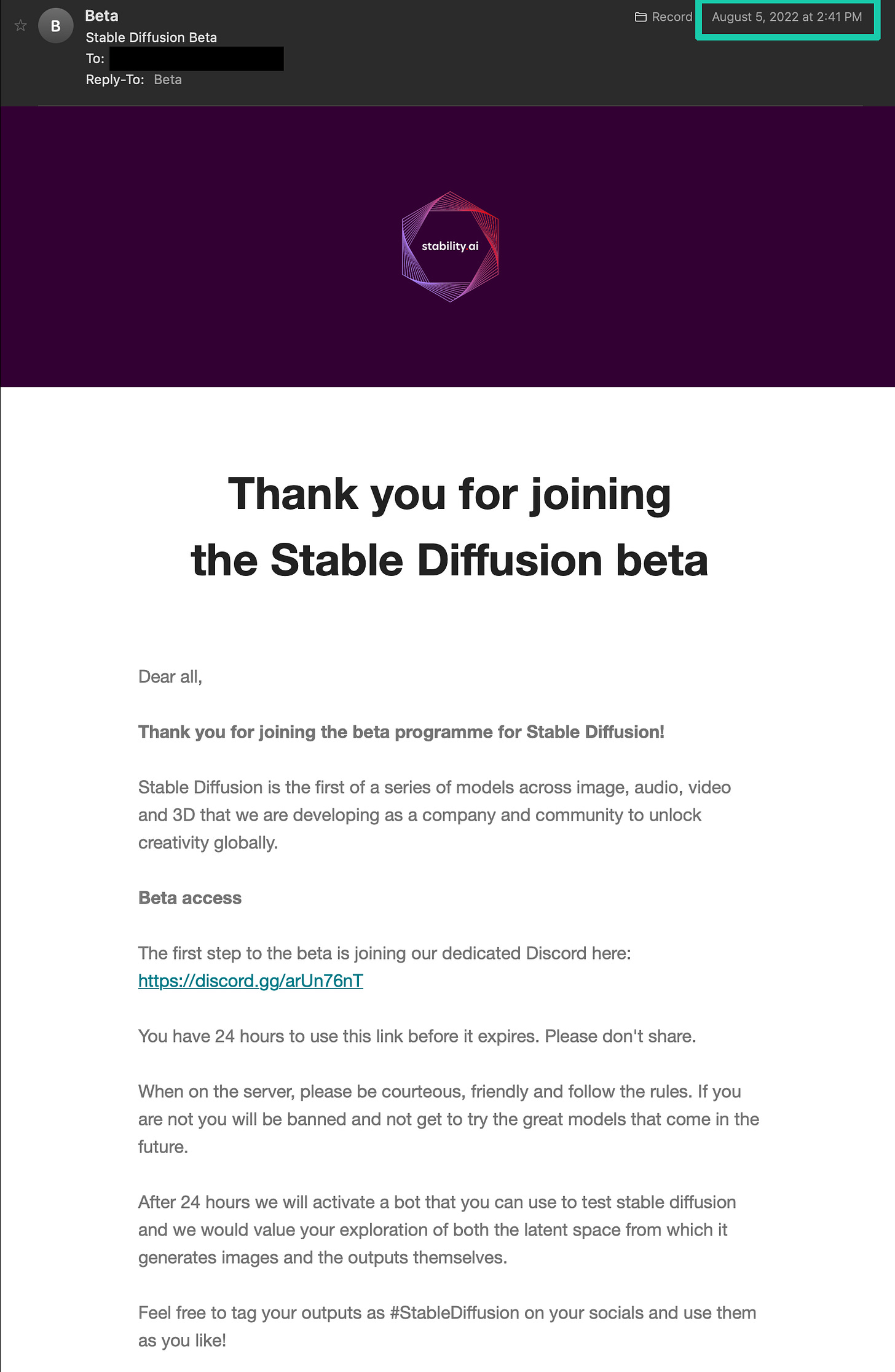

Yep, this is my initial beta signup request for access to the Stable Diffusion beta.

Take note of the highlighted date, a month after Steven Jarvis claims to have been targeted with the child GAN image.

That’s right: not only did Stable Diffusion 2.1 not even exist yet, but Stable Diffusion 1.4 — the first with a public access beta in any form — was not even available to those who had signed up for the beta test until a month later.

How could someone use a model that did not exist yet to do what he claims, let alone a much more advanced version of that model?

That’s right: they could not have done so.

Yet another claim that fact-checking would have clearly debunked, if anybody had cared enough to bother.